Why DIU is looking to speedily buy deepfake-detection technology

Aiming to confront and mitigate the disruptive, still-emerging threats posed by deepfakes, the Pentagon’s Defense Innovation Unit is moving quickly to adopt commercially made capabilities that can detect and attribute such fabricated or manipulated multimedia and online content.

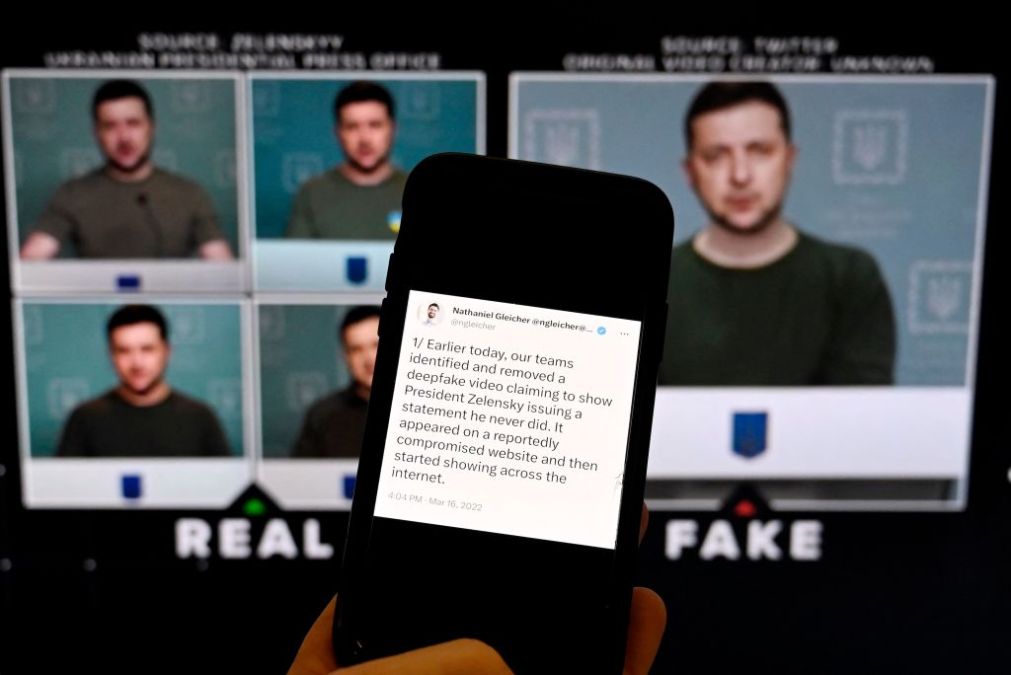

Deepfake technology, broadly, applies machine learning and artificial intelligence capabilities to “face swap” individuals or create synthetic audio and visual media that can portray people who appear to say and do things that they did not.

Deepfakes reportedly first emerged in 2017. Since then, the technology has become more widely available and simpler to use, its output has become harder to detect, and such altered material has spread online at a growing rate, including in fake pornographic videos targeting celebrities and in campaigns against major political figures.

In one relatively recent example, a heavily manipulated deepfake that circulated on social media showed Ukrainian President Volodymyr Zelenskyy appearing to tell his military to surrender in the war against Russia.

“This technology is increasingly common and credible, posing a significant threat to the Department of Defense, especially as U.S. adversaries use deepfakes for deception, fraud, disinformation, and other malicious activities,” DIU officials wrote in a Commercial Solutions Opening that closes this week.

To address this issue, the Pentagon’s innovation hub intends to rapidly field deepfake detection and attribution solutions. The unit wants entities that can meet its detailed criteria and “desired solution attributes” in the CSO to apply for collaboration and possible investment.

Deepfakes have historically been used against popular political candidates, particularly in election years, and some have gone viral.

But that’s not what motivated DIU to launch this specific opportunity, which comes ahead of the 2024 election.

“Deepfake analysis and detection is increasingly important for the DOD at all times, independent of election cycles. Deepfake detection, analysis, and identification have been department priorities and it is a great time to leverage commercial solutions to aid in this critical technology area,” a DIU spokesperson told DefenseScoop.

“Most recently, the RSA Innovation Sandbox 2024 winner presented a voice realism solution that they plan to adapt and scale. DIU’s interest piqued this year as the maturity of the commercial market has reached a level that the department is interested in exploring,” the official explained.

All projects submitted under this CSO will need to comply with DIU’s Responsible AI Guidelines and align with an open systems architecture approach, according to the posting. Proposals will be accepted through June 27.

Notably, the Defense Advanced Research Projects Agency has been building tools since at least 2019 to quickly pinpoint deepfakes and counter large-scale, automated disinformation attacks.

Asked whether the new DIU opportunity signals that the DOD now wants to buy such capabilities rather than only build its own, the spokesperson told DefenseScoop that the innovation hub works with its “DOD partners, like DARPA, in a complementary but distinct way to prototype and integrate solutions from cutting edge industry solution providers.”

“Due to the maturity of the market expressed above, DIU is excited to support the department mission partner in this critical technology area,” they said.